How big is big data? Huge.

IBM estimates that we create 2.5 quintillion bytes of data each day and, by its math, 90 percent of all the data in the world today has been created in the last two years.

But big data, as a concept, is nothing new. The work of analyzing, sorting, comparing, and simulating data on a large scale is what supercomputers have done for decades in cryptography and weather forecasting. What’s different now is that everything happens faster. More people using more devices produce data more quickly in more ways. The answers you get from comparing gigantic sets of data are now needed just as much by CEOs as by academics and R&D labs.

And the answers are needed fast. In a worldwide logistics company, identity verification can prevent costly delivery mistakes. Homeland security officials might literally be looking for a face in a crowd. And data-driven drug makers might be able to fight cancer more effectively by targeting medication only at specific genes.

If the concept of big data is nothing new, neither are the guys determined to take on the challenge. In Richardson, six-year-old Convey Computer is loaded with high-tech veterans who have been performing computing heroics for years.

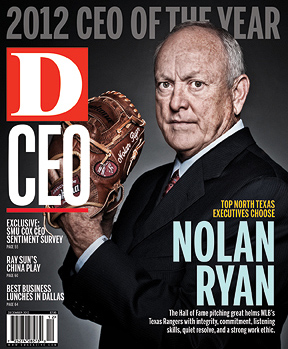

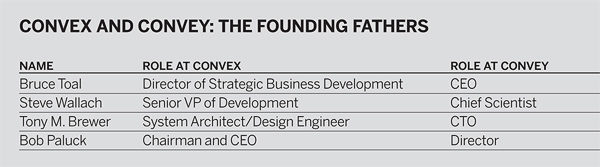

CEO Bruce Toal, Chief Scientist Steve Wallach, and CTO Tony M. Brewer started Convey; Bob Paluck, a director at CenterPoint Ventures, invested and took a seat on the board. More investors, like Intel Capital and Xilinx Inc., stepped up as well. The company has raised more than $40 million to date, closing its most recent funding round in 2009. Convey shipped its first product that year and debuted its second-generation systems in April.

Last November, though, the company revealed its new computing architecture aimed at taming big data. And to appreciate where these guys are going, it helps to recall what they’ve done.

Convey is just one letter off from one of the most celebrated names in computing: Convex Computer Corp.

Founded in 1982, Convex put its stamp on tech history by creating sub-$1 million supercomputers and taking on giants like Cray Research, IBM, and Silicon Graphics Inc. Toal says Convey has a strong link to the past. “We’ve put the band back together,” he says. Toal, Wallach, Brewer, and Paluck are all former Convex executives—“ex-Cons,” as they say.

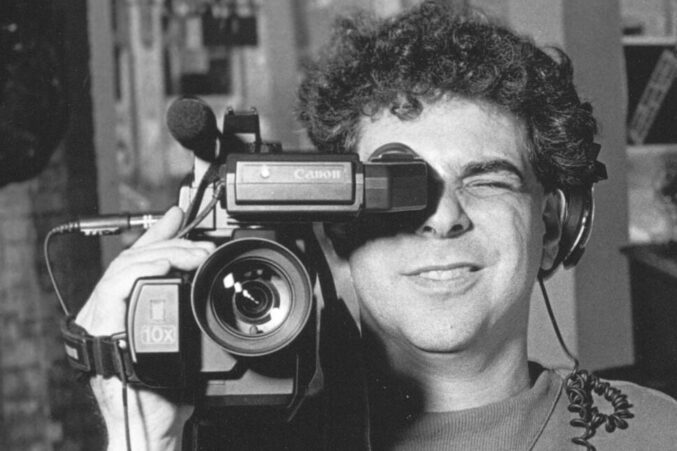

Richardson-based Convex, which employed around 1,100 people at its peak, had a remarkable “work hard, play hard” culture. It held annual beach parties for which contractors covered the company parking lot with 200 tons of sand. One year, then-CEO Paluck and his top VPs took a dive into a 72-gallon washtub of raspberry Jell-O, a celebration suggested by Convex employees. Fallen competitors were mocked and memorialized outside one of the company’s patios, their names etched on concrete tombstones.

As technology evolved, Hewlett-Packard bought Convex in 1995. When competitor SGI filed for bankruptcy, HP brass frowned on the idea of having a ceremony where a backhoe would bury a huge SGI machine in the campus yard. “I would have done it in a heartbeat,” Toal says, folding his arms.

Convey, as of September 2012, employs 52 people. Toal says 40 of those employees are engineers, and about 60 percent of the group worked at Convex back in the day. Toal compares the assembled team to a group of musicians who have played together for a long time. “We know each other’s strengths and weaknesses,” he says.

Four Target Markets

Convey is chasing four markets that require what Toal calls “data intensive computing”: life sciences, government, research, and big data. It may seem odd for a specialized computer firm to chase four markets at once, but that’s what makes Convey unique. Toal says the company’s goal has always been to “create the kind of performance you can get for application-specific computing, but make it flexible enough that we can target more than one market.

“Whenever you can use every bit of a transistor for solving a problem, you get better performance and lower power consumption,” he says.

By “application specific,” Toal is referring to one of the great trade-offs in high-performance computing (HPC). Many supercomputers are simply clusters of servers, often several racks’ worth, built on x86-based chips, the kind used in standard PCs.

These machines are widely available, but getting several servers to work together as one coherent system running a single application is tough. Also, most HPC systems are fine-tuned to handle specific types of calculations, such as protein sequencing and matching.

The problem? Creating a more powerful HPC in that paradigm means adding racks and racks of servers, which can drive up the cost of keeping the lights on and the air conditioners blowing.

Indeed, creating more powerful machines that use less power is its own challenge. “In tracking spending in HPC, the percent of the average HPC budget going to facilities costs has increased every year for the last five years,” says Addison Snell, president and CEO of Intersect360 Research, which monitors the HPC industry.

Hybrid Computing

Convey’s computing architecture, designed by Wallach, also uses standard Intel processors. But those chips are tightly coupled with Convey’s coprocessor, which is based on standard Field Programmable Gate Arrays (FPGAs). As the name suggests, FPGAs can have their programming changed after they’ve been installed in a computing system.

That blending of two different types of processors sounds like it would create a programming nightmare, but it doesn’t: the integration allows the separate memories of the Intel chips and the FPGAs to be viewed and treated as a single, shared memory.

“They have essentially tailored the system for applications where a substantial portion of the calculations are offloaded to the FPGAs, thus easing the burden of x86 processors,” Snell explains. Toal says that makes software development for Convey’s computers as easy as it is in a standard x86 environment.

Integrating standard parts in a way that provides a boost in computing and lowers power consumption, with no additional programming complexities, is Wallach’s calling card. “It’s not necessarily a secret,” Toal says. “Anybody could do it. But it’s really, really, really hard.”

The integration between those two chip types allows Convey’s systems to take on different “personalities” to handle the tasks related to the company’s four target markets. The personalities are sets of instructions that program the hardware to accelerate applications. Proteomics research has its own distinct personality. Speech recognition and military applications have theirs, too.

With “personalities,” Convey can ship the same hardware to, say, the Defense Department as it would to a genetic research lab. A Convey customer could also run different algorithms at different times on the same system. Nothing physically changes at all.
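To make the idea of a personality a little more concrete, here is a toy Python model. It is purely illustrative and is not Convey’s software or API: it assumes a personality can be thought of as a named bundle of accelerated operations loaded onto one unchanging piece of hardware. The class names, personality names, and operations are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

# Hypothetical model, not Convey's API: a "personality" is a named bundle of
# operations that reprograms one fixed piece of (simulated) hardware.
Operation = Callable[[List[int]], int]


@dataclass
class Personality:
    name: str
    operations: Dict[str, Operation]


class ReconfigurableCoprocessor:
    """Toy stand-in for an FPGA-based coprocessor: the object itself never
    changes, only the personality currently loaded onto it."""

    def __init__(self) -> None:
        self.personality: Optional[Personality] = None

    def load(self, personality: Personality) -> None:
        # Swapping personalities replaces the available operations;
        # the "hardware" object itself is not rebuilt.
        self.personality = personality

    def run(self, op_name: str, data: List[int]) -> int:
        # Only operations provided by the loaded personality can run.
        if self.personality is None or op_name not in self.personality.operations:
            raise RuntimeError(f"operation {op_name!r} not available in current personality")
        return self.personality.operations[op_name](data)


# Two illustrative personalities for different markets (both hypothetical).
life_sciences = Personality("life-sciences", {"best_match_score": max})
signal_processing = Personality(
    "signal-processing", {"signal_energy": lambda xs: sum(x * x for x in xs)}
)

if __name__ == "__main__":
    chip = ReconfigurableCoprocessor()
    chip.load(life_sciences)
    print(chip.run("best_match_score", [3, 9, 4]))  # 9
    chip.load(signal_processing)  # same object, new personality
    print(chip.run("signal_energy", [1, 2, 3]))  # 14
```

The point the sketch tries to capture is the one in the text: switching workloads means loading a different personality, not installing a different machine. A real FPGA personality reconfigures logic and instruction sets, which a Python dictionary only loosely imitates.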

In each case, Convey is not wringing a little more speed out of a common computing system; it is changing the way the information is digested and used, which gets a faster result while using less storage and power.

The Next Big Thing

Wallach says Convey’s newest computing architecture, code-named “Fox,” is designed specifically to handle the characteristics of big data: problems involving complex, often unstructured data types, and problems that require nearly instant answers.

Big data problems that require a more flexible, scalable computer include “risk analytics in financial markets, fraud and error detection across a wide range of markets, or catching terrorists before they leave the airport,” according to market researcher IDC, in a paper on Convey’s computing architecture.

“There are a lot of different dimensions to big data,” Snell says. “Sometimes the size of the file is huge. Sometimes the number of inputs is huge… If you’ve got this type of architecture that Convey has, there’s a huge opportunity to find more areas that are in, or beyond, the areas of high-performance computing affected by big data.”

To tackle this, Convey’s Fox platform cuts down on the number of trips data must make between the already tightly integrated processor and coprocessor to complete calculations. By putting more intelligence in the computer’s memory, Convey has found a way to make fewer round trips per instruction set. Fewer trips mean the system can handle more simultaneous threads, or independent sequences of program instructions.

Convey’s new machine, Toal says, can handle 4,000 simultaneous threads; its current systems can handle “around 1,000.” He says the new system delivers a tenfold increase in performance and can hold up to four terabytes of shared system memory.

With a high-performance computing architecture that speeds up applications and provides a new level of flexibility, Convey has more than a little in common with its ancestor. “That’s our goal,” Toal says. “Become another Convex and grow up and keep having fun.

“We were blessed at Convex to have some of the brightest people working together. Since working for a larger company, you realize that was a really unique situation,” he says.

Snell notes that Toal, Wallach, and the other “ex-Cons” are competitive and driven to solve bigger and bigger problems, a common trait among the HPC set. “In enterprise computing, if it works, you don’t touch it. In HPC, if it works, it’s no longer interesting,” he says. “Presumably Bruce has a ‘Convez’ in him somewhere along the way.”